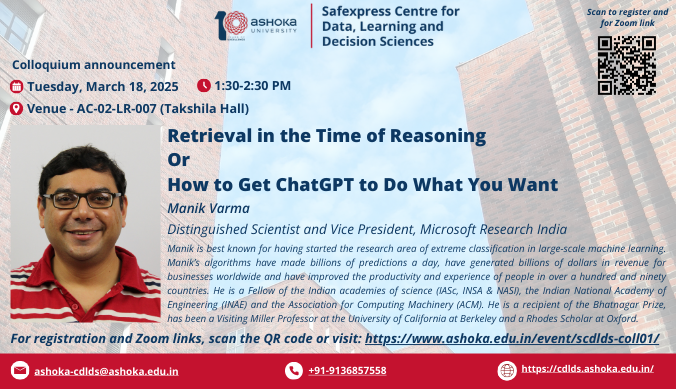

SCDLDS Technical Seminar

by Manik Varma

Distinguished Scientist and Vice President

Microsoft Research India

Manik Varma is a Distinguished Scientist and Vice President at Microsoft Research India and an Adjunct Professor at the Indian Institute of Technology Delhi. He is best known for having started the research area of extreme classification in large-scale machine learning. Manik’s algorithms have made billions of predictions a day, have generated billions of dollars in revenue for businesses worldwide and have improved the productivity and experience of people in over a hundred and ninety countries. Manik is also known for his research on developing tiny classifiers that can fit within 2–16 KB of RAM and run on microcontrollers smaller than a grain of rice. His classifiers have been deployed on hundreds of millions of devices and have protected them from viruses and malware. Manik has served as an Associate Editor-in-Chief of the IEEE Transactions on Pattern Analysis & Machine Intelligence as well as a senior area chair at most of the premier conferences in machine learning, artificial intelligence and computer vision. He is a Fellow of the Indian academies of science (IASc, INSA & NASI), the Indian National Academy of Engineering (INAE) and the Association for Computing Machinery (ACM). He is a recipient of the Bhatnagar Prize, has been a Visiting Miller Professor at the University of California at Berkeley and a Rhodes Scholar at Oxford.

Abstract:

Large Language Models (LLMs), such as GPT-4 and o1, have delivered game-changing reasoning and synthesis capabilities, leading pundits to proclaim that we have entered a new age of LLM reasoning. Yet, LLMs can make mistakes while answering simple factual queries, such as “Who did Ashoka University hire most recently?”, and can fail spectacularly at complex tasks such as “Write a 2-page document summarizing the history of Ashoka University”. A peek under the hood reveals that these mistakes are often caused by failures of the retrieval model, which is responsible for fetching relevant information from the web and private databases. Such retrieval failures can lead to particularly egregious responses when the information required for completing the task at hand is not present in the LLM itself and needs to be fetched from external sources.

In this talk, I will discuss what the architecture and flow might look like for a retrieval platform for large-scale AI workloads that can significantly reduce such retrieval failures and thereby lead to much better LLM responses. I will also discuss how we can build a state-of-the-art generative retrieval model that forms the core of the platform and can accurately fetch documents in milliseconds in a cost-effective manner. Finally, I will share some empirical evidence on how such a retrieval model might benefit millions of users around the world. Most parts of my talk should be broadly accessible to a lay audience.