SCDLDS Technical Seminar

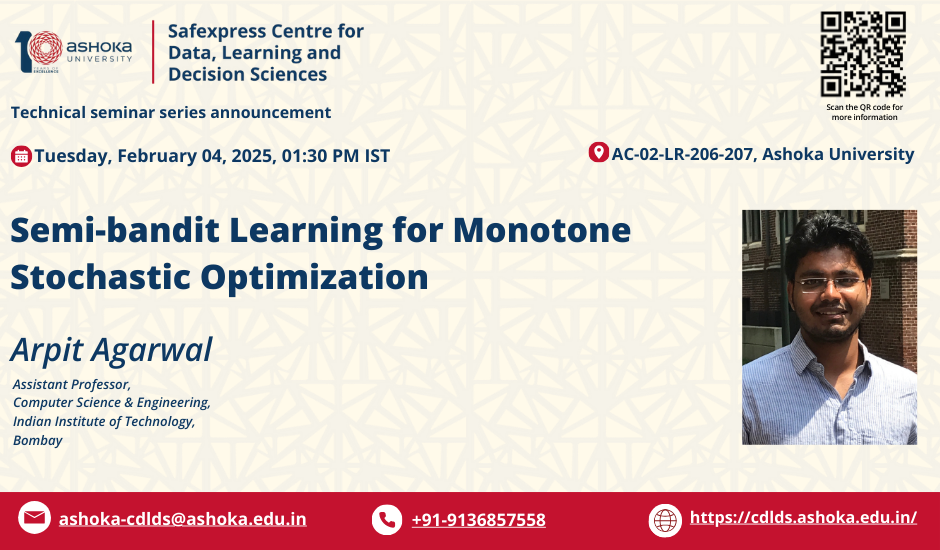

by Arpit Agarwal

Assistant Professor

Computer Science & Engineering

Indian Institute of Technology Bombay

Arpit Agarwal is an Assistant Professor in the Department of Computer Science and Engineering at IIT Bombay. Prior to joining IIT Bombay, he was a postdoctoral researcher at FAIR Labs (Meta), where he worked with Max Nickel on socially responsible recommendation systems. Before that, he was a postdoctoral fellow at the Data Science Institute at Columbia University, hosted by Prof. Yash Kanoria and Prof. Tim Roughgarden. He completed his PhD in the Department of Computer & Information Science at the University of Pennsylvania, under the guidance of Prof. Shivani Agarwal. Arpit’s research lies in the field of machine learning (ML) and artificial intelligence (AI), with a specific focus on the interaction between humans and ML/AI systems. His work spans topics such as learning from implicit, strategic, and heterogeneous human feedback; understanding the dynamics of human-AI interactions and their long-term influences; and designing AI systems responsibly to mitigate undesired consequences for individuals and society.

Abstract: Stochastic optimization is a widely used approach for optimization under uncertainty, where uncertain input parameters are modeled by random variables. Exact or approximation algorithms have been obtained for several fundamental problems in this area. However, a significant limitation of this approach is that it requires full knowledge of the underlying probability distributions. Can we still get good (approximation) algorithms if these distributions are unknown, and the algorithm needs to learn them through repeated interactions? In this paper, we resolve this question for a large class of “monotone” stochastic problems, by providing a generic online learning algorithm with √ T log T regret relative to the best approximation algorithm (under known distributions). Importantly, our online algorithm works in a semi-bandit setting, where in each period, the algorithm only observes samples from the r.v.s that were actually probed. Our framework applies to several fundamental problems in stochastic optimization such as prophet inequality, Pandora’s box, stochastic knapsack, stochastic matchings and stochastic submodular optimization.